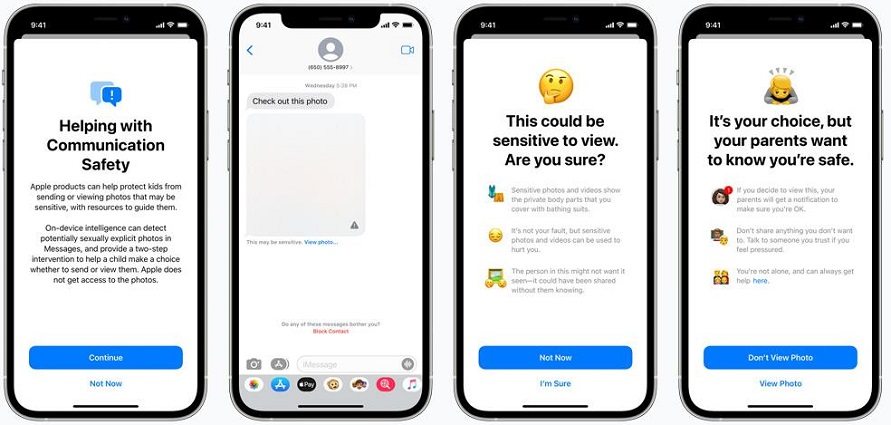

Apple has introduced a new set of technological measures to prevent child abuse. In family iCloud accounts, an opt-in setting will use machine learning to detect nudity in images. The system will block such images from being sent or received, display warnings, and alert parents when the child has sent or viewed them.

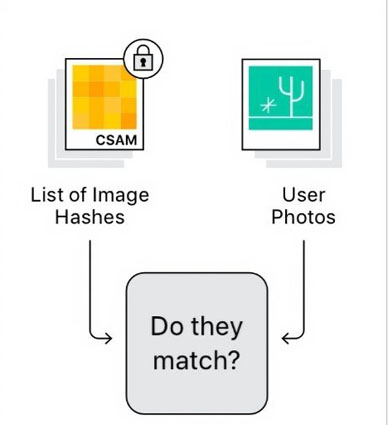

Apple has stated that none of these features, including the one for detecting CSAM (child sexual abuse material), endanger user privacy. The iCloud detection mechanism uses cryptography designed to keep the scanning system from accessing any images that are not CSAM. Apple is confident the new system can play a significant role in fighting child exploitation, since it can disrupt predatory messages, and the announcement includes endorsements from a number of cryptography experts.

Apple has announced three separate updates, each related to child safety. CSAM detection is the most significant of them and has drawn the most attention. This feature scans photos uploaded to iCloud Photos and compares them against a database of previously identified abuse material. A review process is triggered only when a certain number of matching images has been detected.
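The core idea of matching against a known database and flagging an account only past a threshold can be illustrated with a minimal Python sketch. This is not Apple's implementation. Apple's system uses a perceptual hash (NeuralHash) combined with cryptographic protocols. This sketch substitutes plain SHA-256 digests, which only match byte-identical files. The function names `image_hash`, `count_matches`, and `needs_review` and the threshold value are hypothetical.

```python
import hashlib

# Hypothetical stand-in for a perceptual hash: SHA-256 only matches
# byte-identical files, unlike the perceptual hashing Apple describes.
def image_hash(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def count_matches(uploads: list[bytes], known_hashes: set[str]) -> int:
    """Count uploaded images whose hash appears in the known-material database."""
    return sum(1 for data in uploads if image_hash(data) in known_hashes)

def needs_review(uploads: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    """Flag an account for human review only once matches reach the threshold."""
    return count_matches(uploads, known_hashes) >= threshold

if __name__ == "__main__":
    known = {image_hash(b"known-image-1"), image_hash(b"known-image-2")}
    uploads = [b"holiday-photo", b"known-image-1", b"known-image-2"]
    print(count_matches(uploads, known))               # 2
    print(needs_review(uploads, known, threshold=3))   # False: below the hypothetical threshold
```

Photos that are not in the database, such as the holiday photo above, never contribute to the count. The threshold means a single stray match does not trigger a review on its own.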

If human reviewers verify the images, Apple will suspend the iCloud account and report it to NCMEC, the National Center for Missing and Exploited Children. There is also a communication safety feature for the Messages app that detects when children send or receive sexually explicit photos.

Children older than 13 will see a warning when the Messages app receives an explicit image. Apple is also updating Siri and Search to intervene in queries related to CSAM. Siri will provide links to resources for anyone who wants to know how to report abuse material.

Although many people agree that the system is appropriately limited in scope, a number of watchdogs, experts, and privacy advocates are concerned about its potential for abuse. Some cloud storage providers, such as Microsoft and Dropbox, already perform image detection on their servers. Opinions remain divided, and some users are even thinking of switching to Android phones if things continue in this direction. Because of these disputes, Apple's CSAM plans have received mixed reviews.

Two academics at Princeton University say they consider Apple's CSAM system vulnerable to misuse because they had previously built a similar one. According to them, their system worked in much the same way, but they quickly spotted a glaring problem: it could easily be repurposed for surveillance or censorship simply by changing the database it matched against.

Many iPhone and iPad users may be worried about the detection feature, which may roll out with the next major iOS and iPadOS update. There is still a way to stop Apple from scanning your personal photos. It is worth noting that the CSAM tool only looks at images you upload to iCloud. To be clear, the detection system will not scan photos sent through end-to-end encrypted apps like WhatsApp or Telegram. This contradicts some early warnings claiming that the tool could be used to get around end-to-end encryption.

If you've already synced photos to iCloud and would like to stop the detection tool from scanning them, take the following steps:

1. Open Settings and tap your name at the top.
2. Go to iCloud > Photos and turn off iCloud Photos.
3. To keep the media from your iCloud library on the device, tap 'Download Photos and Videos' when prompted.

Apple strongly denies that it is degrading privacy or walking back any previous commitments. The company has published a second, more detailed document addressing most of the claims. Apple emphasizes that it only compares user photos against a collection of known child exploitation material, so photos of your own children will not trigger a report.

Apple has also said that the odds of a false positive are one in a trillion, because a certain number of images must be matched before a review is triggered. However, Apple has given outside researchers little visibility into how all of this works, so it is essentially asking users to take its word for it.
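The threshold argument can be illustrated with a small calculation, which shows why requiring several matches shrinks the false-positive odds so sharply. This is a simplified model with assumed inputs, not Apple's published analysis. It assumes each photo independently has a small, hypothetical per-image false-match probability `p`. The number of false matches among `n` photos is then binomially distributed. The sketch computes the probability of reaching a threshold `t` as P(X >= t) = sum over k = t..n of C(n, k) p^k (1 - p)^(n - k). The function name `prob_at_least` and all numbers are hypothetical.

```python
import math

def prob_at_least(t: int, n: int, p: float) -> float:
    """P(X >= t) for X ~ Binomial(n, p).

    Terms are computed in log space (via lgamma) because C(n, k) quickly
    becomes too large to convert to a float for realistic photo counts.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for k in range(t, n + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * math.log(p) + (n - k) * math.log1p(-p))
        total += math.exp(log_term)  # underflows harmlessly to 0.0 for tiny terms
    return total

if __name__ == "__main__":
    # Hypothetical library of 10,000 photos, hypothetical per-image error rate.
    n, p = 10_000, 1e-6
    print(prob_at_least(1, n, p))   # chance of at least one false match
    print(prob_at_least(10, n, p))  # chance of reaching a threshold of 10
```

Under these made-up numbers, the second probability is many orders of magnitude smaller than the first. This is the effect Apple relies on when it cites a threshold. Real-world figures depend on the actual error rate and on whether errors are truly independent, which outside researchers cannot verify.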

Furthermore, Apple says the manual review by human reviewers can detect whether CSAM ended up on a device as the result of a malicious attack. The company also says it will refuse any demands from governments or law enforcement agencies to expand the system, noting that it has refused past demands to make changes that degrade user privacy.

Over the past few days, Apple has continued to clarify the CSAM detection feature it announced. The company has said that CSAM detection applies only to photos stored in iCloud, not videos. It also defends its implementation as more privacy-preserving than the approaches other companies use. However, Apple has acknowledged that more can be done and that its plans may expand and evolve over time. More explanations are likely to follow, and they should bring further clarity.

We value your opinion on Apple's latest CSAM developments, so please share your thoughts on Apple's Child Safety updates in the comments below.

By Anna Sherry

2025-12-19 / iOS 15